I’ve been on holiday this week. So when an email came through from a friend pointing me to the CNN report on this paper, I read the title, scanned the first couple of paragraphs, and filed it away for later reading.

I did keep thinking about it, though, especially while sitting alone in the onsen after a day in the snow. Next week I am giving another rendition of the talk that started this blog, in the Department of Physics and Astronomy at the University of Canterbury, and that was very much on my mind. So I sat, soaking, and thought about what the study might mean, because from the first time I put a summary of the evidence together I have worried that I might easily be accused of cherry-picking the data. Without a research background in psychology or social science, I think this is a sensible thing for me to worry about.

So how could a study reporting favoritism towards women in science be reconciled with the previous studies I had read? The conclusion I came to, sitting in the sulphurous hot water and staring at the birch trees that framed my view of the sky and snow, was simply this: any study that looks into the issues of women in science is one that I welcome, even (especially) if it challenges the conclusions I have previously come to. I may not have a background in social science, but working in the physical sciences is not a bad place to learn about the many complexities that can arise in an apparently simple system.

People are not a simple system.

So: before having read the original study, and only based on the CNN summary, I had framed a few possible hypotheses in my mind. These run as follows:

1) CVs of tenure-track candidates are being evaluated without the gender bias demonstrated in the Moss-Racusin study, where the applicants were applying for jobs that did not require a PhD. If so, the effective gender bias may differ between these career stages.

2) The extent to which the issues of women in science have been publicised in the last few years, following the Moss-Racusin study, the study of attitudes towards the concept of innate talent, and a range of other pieces of research – oh, and a whole issue of Nature devoted to the problem – has led to a change in awareness, and therefore in behaviour (which is not necessarily the same as suggesting that unconscious biases have changed in any measurable way, which a lot of literature would suggest is much, much harder).

Hypothesis 1) is pretty well ruled out by the consistency between the Moss-Racusin study and numerous other studies that show gender bias in the evaluation of CVs for a range of jobs, across different disciplines, fields, and levels of seniority. A secondary version of this hypothesis might still be valid: that there is something special about academic tenure-track science positions. This, however, can easily be turned into a version of hypothesis 2): perhaps the attention given to the issues of women in science has caused widespread recognition within the academic community, and, we may hope, scientists are willing to work towards countering their biases.

This would be the positive way of framing my reaction 1.0: at least, this hope that discussion and awareness would lead to positive change was the major reason for my putting this talk/blog together in the first place. So I’d like to take the results of the current study in that spirit.

However, having now had a little time to read the original research paper, I think I need to add to this, so what follows here is my reaction 2.0.

A great summary of some of the technical issues with the paper is here. I won’t reiterate the points it makes so well, other than to say that I would not be quick to condemn any piece of research for weaknesses in methodology, so long as those weaknesses are acknowledged in the paper itself and taken into account in the formulation of its conclusions.

One point I would like to focus on here is the authors’ account of their motivations for doing this study. As they say themselves:

These results suggest it is a propitious time for women launching careers in academic science. Messages to the contrary may discourage women from applying for STEM (science, technology, engineering, mathematics) tenure-track assistant professorships.

Now I am very sympathetic to the implied discomfort with continued negative messages around the issues of women in science. I have always been very careful to insist that the biases and sexism are really only assessable in a statistically meaningful sample; to worry about the impact of these (mostly rather small) factors on an individual career is nonsensical, not to mention unhealthy. It also misses the importance of intersectionality by trying to reduce analysis of what is and isn’t fair to a single dimension – life is not always fair, and to a large extent, those of us who do well in academia do so because we have had the luck to have been well supported – by mentors, family, and friends – at crucial moments in our careers. It does come down to luck: while you can certainly make your own luck at times, I’d argue that doing so is a necessary but not sufficient condition for success.

So yes: gender imbalance in science exists, but the sexism that we have to deal with is structural; I don’t believe I have seen any indication that direct sexism exists in academia at greater levels than in society generally (though it certainly does exist). Talking about gender imbalance, honestly and openly, is, I genuinely believe, better than trying to conceal the truth under a coating of ‘nothing to see here, it’s all fine’.

And this is the irony in the current PNAS article. The results most likely suggest, contrary to the authors’ conclusions, that talking about gender imbalance and unconscious bias has made a difference to people’s attitudes. At least, to the attitudes of the 30% of academics who would opt to participate in such a study.

I say attitudes only, and not behaviours, because of the nature of the study: assessing candidates for assistant professorships on the basis of individual ‘narratives’ (not even CVs) is a long way from a real hiring scenario, and one in which the goals of the study are likely to be highly transparent. Participants may well feel freer than they would in a non-hypothetical scenario to make the decision that they think is the right one, in a world in which gender bias is apparent.

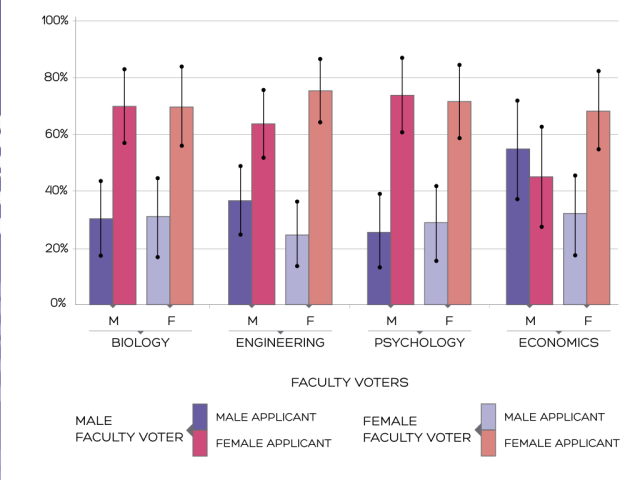

I’m not even going to comment on the fact that it’s only the male economists that show no preference for female candidates, in this scenario – whether it is the awareness or the will that is lacking, I am not qualified to judge.

Finally, I will take issue with the conclusions that the authors come to:

Our data suggest it is an auspicious time to be a talented woman launching a STEM tenure-track academic career, contrary to findings from earlier investigations alleging bias

The data show considerably stronger bias than the Moss-Racusin study did, just pointing in the opposite direction! The last thing that this article shows is that sexism is dead. It does, however, provide an interesting demonstration of how querying and qualifying experiences of gender bias, even if done for the best of reasons, can lead to an amplification of the original sexism.

We hope the discovery of an overall 2:1 preference for hiring women over otherwise identical men will help counter self-handicapping and opting-out by talented women at the point of entry to the STEM professoriate

As opposed to just giving ammunition to those who have been pushing back against efforts to get women into science by alleging ‘you only got the job because you’re a woman’?

Way to increase the damage done by imposter syndrome, guys.

[The comment below is a response to Dr. Zuleyka Zevallos’s critique of the PNAS study on STEM faculty hiring bias by Wendy Williams and Stephen Ceci: http://othersociologist.com/2015/04/16/myth-about-women-in-science/]

Zuleyka, thank you for your engaging and well researched perspective. On Twitter, you mentioned that you were interested in my take on the study’s methods. So here are my thoughts.

I’ll respond to your methodological critiques point-by-point, in the same order as you raised them: (a) self-selection bias is a concern, (b) raters likely suspected the study’s purpose, and (c) the study did not simulate the real world. Have I missed anything? If so, let me know. Then I’ll also discuss the rigor of the peer review process.

As a forewarning to readers, the first half of this comment may come across as a boring methods discussion. However, the second half talks a little about the relevant players in this story and how the story has unfolded over time, so it may interest a broader readership than the first half. Nevertheless, let’s dig into the methods.

(a) WAS SELF-SELECTION A CONCERN?

You note that emails were sent to 2,090 professors in the first three of the five experiments, of whom 711 provided data, a response rate of 34%. You also note a control experiment involving psychology professors that aimed to assess self-selection bias.
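As a quick check of that figure, here is a minimal sketch that simply divides the two counts quoted above (2,090 invited, 711 responding); nothing beyond those two numbers is assumed.

```python
# Counts quoted in the comment for the first three experiments.
invited = 2090      # professors who were emailed
responded = 711     # professors who provided data

# Response rate is the fraction of invited professors who responded.
response_rate = responded / invited
print(f"Response rate: {response_rate:.1%}")
```

This prints a response rate of 34.0%, consistent with the 34% reported.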

You critique this control experiment because, “including psychology as a control is not a true reflection of gender bias in broader STEM fields.” Would that experiment have been better if it incorporated other STEM fields? Sure.

But there are other data that also speak to this issue. Analyses reported in the Supporting Information found that respondents and nonrespondents were similar “in terms of their gender, rank, and discipline.” And that finding held true across all four sampled STEM fields, not just psychology.

The authors note this type of analysis “has often been the only validation check researchers have utilized in experimental email surveys.” And many studies do not even perform such analyses. Hence, the control experiment with psychology was their attempt to improve on prior methodological approaches, and was only one part of their strategy for assessing self-selection bias.

(b) DID RATERS GUESS THE STUDY’S PURPOSE?

You noted that, for faculty raters, “it is very easy to see from their study design that the researchers were examining gender bias in hiring.” I agree this might be a potential concern.

But they did have data addressing that issue. As noted in the Supporting Information, “when a subset of 30 respondents was asked to guess the hypothesis of the study, none suspected it was related to applicant gender.” Many of those surveyed did think the study was about hiring biases for “analytic powerhouses” or “socially-skilled colleagues.” But not about gender biases, specifically. In fact, these descriptors were added to mask the true purpose of the study. And importantly, the gendered descriptors were counter-balanced.

The fifth experiment also addresses this concern by presenting raters with only one applicant. This methodological feature meant that raters couldn’t compare different applicants and then infer that the study was about gender bias. A female preference was still found even in this setup that more closely matched the earlier 2012 PNAS study.

(c) HOW WELL DID THE STUDY SIMULATE THE REAL WORLD?

You note scientists hire based on CVs, not short narratives. Do the results extend to evaluation of CVs?

There’s some evidence they do, from Experiment 4.

In that experiment, 35 engineering professors favored women by 3-to-1.

Could the evidence for CV evaluation be strengthened? Absolutely. With the right resources (time; money), any empirical evidence can be strengthened. That experiment with CVs could have sampled more faculty or other fields of study. But let’s also consider that this study had 5 experiments involving 873 participants, which took three years for data collection.

Now let’s contrast the resources invested in the widely reported 2012 PNAS study. That study had 1 experiment involving 127 participants, which took two months for data collection. In other words, this current PNAS study invested more resources than the earlier one by almost 7:1 for number of participants and over 18:1 for time collecting data. The current PNAS study also replicated its findings across five experiments, whereas the earlier study had no replication experiment.
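The ratios above can be reproduced from the quoted figures with a short sketch. The one assumption is converting "three years" to exactly 36 months, which gives 18:1; the "over 18:1" in the text would imply a collection period somewhat longer than exactly three years.

```python
# Figures quoted in the comment.
new_participants, old_participants = 873, 127
new_months = 3 * 12   # assumption: "three years" taken as exactly 36 months
old_months = 2        # "two months" for the 2012 study

participant_ratio = new_participants / old_participants
time_ratio = new_months / old_months

print(f"Participants: {participant_ratio:.2f}:1")
print(f"Data-collection time: {time_ratio:.0f}:1")
```

The participant ratio comes out at about 6.87:1 ("almost 7:1"), and the time ratio at 18:1 under the 36-month assumption.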

My point is this: the available data show that the results for narrative summaries extend to CVs. Evidence for the CV results could be strengthened, but that involves substantial time and effort. Perhaps the results don’t extend to evaluation of CVs in, say, biology. But we have no particular reason to suspect that.

You raise a valuable point, though, that we should be cautious about generalizing from studies of hypothetical scenarios to real-world outcomes. So what do the real-world data show?

Scientists prefer *actual* female tenure-track applicants too. As I’ve noted elsewhere, “the proportion of women among tenure-track applicants increased substantially as jobseekers advanced through the process from applying to receiving job offers.”

https://theconversation.com/some-good-news-about-hiring-women-in-stem-doesnt-erase-sex-bias-issue-40212

This real-world preference for female applicants may come as a surprise to some. You wouldn’t learn about these real-world data by reading the introduction or discussion sections of the 2012 PNAS study, for instance.

That paper’s introduction section does acknowledge a scholarly debate about gender bias. But it doesn’t discuss the data that surround the debate. The discussion section makes one very brief reference to correlational data, but is silent beyond that.

Feeling somewhat unsatisfied with the lack of discussion, I was eager to hear what those authors had to say about those real-world data in more depth. So I talked with that study’s lead author, Corinne Moss-Racusin, in person after her talk at a social psychology conference in 2013.

She acknowledged knowing about those real-world data, but quickly dismissed them as correlational. She had a fair point. Correlational data can be ambiguous. These ambiguous interpretations are discussed at length in the Supporting Information for the most recent PNAS paper.

Unfortunately, however, I’ve found that dismissing evidence simply because it’s “correlational” can stunt productive discussion. In one instance, an academic journal declined to even send a manuscript of mine out for peer review “due to the strictly correlational nature of the data.” No specific concerns were mentioned, other than the study being merely “correlational.”

Moss-Racusin’s most recent paper on gender bias pretends that a scholarly debate doesn’t even exist. Her most recent paper cites an earlier paper by Ceci and Williams, but only to say that “among other factors (Ceci & Williams, 2011), gender bias may play a role in constraining women’s STEM opportunities.”

dx.doi.org/10.1177/0361684314565777

Failing to acknowledge this debate prevents newcomers to this conversation from learning about the real-world, “correlational” data. All data points should be discussed, including both the earlier and new PNAS studies on gender bias. The real-world data, no doubt, have ambiguity attached to them. But they deserve discussion nevertheless.

WAS THE PEER REVIEW PROCESS RIGOROUS?

Peer review is a cornerstone of producing valid science. But was the peer review process rigorous in this case? I have some knowledge of that.

I’ve talked at some length with two of the seven anonymous peer reviewers for this study. Both of them are extremely well respected scholars in my field (psychology), but had very different takes on the study and its methods.

One reviewer embraced the study, while the other said to reject it. This is common in peer review. The reviewer recommending rejection echoed your concern that raters might guess the purpose of the study if they saw two men and one woman as applicants.

You know what Williams and Ceci did to address that concern? They did another study.

Enter data, stage Experiment 5.

That experiment more closely resembled the earlier 2012 PNAS paper: it presented only one applicant to each rater and still found similar results. These new data definitely did help assuage the critical reviewer’s concerns.

That reviewer still has a few other concerns. For instance, the reviewer noted the importance of “true” audit studies, like Shelley Correll’s excellent work on motherhood discrimination. However, a “true” audit study might be impossible for the tenure-track hiring context because of the small size of academia.

The new PNAS study was notable for having seven reviewers, because the norm is two. The earlier 2012 PNAS study had two reviewers. I’ve reviewed for PNAS myself (not on a gender bias study); the journal published that study with only me and one other scholar as the peer reviewers. The journal’s website even notes that having two reviewers is common at PNAS.

http://www.pnas.org/site/authors/guidelines.xhtml

So having seven reviewers is extremely uncommon. My guess is that the journal’s editorial board knew that the results would be controversial and therefore made heroic efforts to protect the reputation of the journal. PNAS has come under fire from multiple scientists who repeatedly criticize the journal for letting studies simply “slip by” and get published because of an old boys’ network.

The editorial board probably knew that would be a concern for this current study, regardless of the study’s actual methodological strengths. This suspicion is further supported by some other facts about the study’s review process.

External statisticians evaluated the data analyses, for instance. This is not common. Quoting from the Supporting Information, “an independent statistician requested these raw data through a third party associated with the peer review process in order to replicate the results. His analyses did in fact replicate these findings using R rather than the SAS we used.”

Now I embrace methodological scrutiny in the peer review process. Frankly, I’m disappointed when I get peer reviews back and all I get is “methods were great.” I want people to critique my work! Critique helps improve it. But the scrutiny given to this study seems extreme, especially considering all the authors did to address the concerns such as collecting data for a fifth experiment.

I plan on independently analyzing the data myself, but I trust the integrity of the analyses based on the information that I’ve read so far.

SO WHAT’S MY OVERALL ASSESSMENT?

Bloggers have brought up valid methodological concerns about the new PNAS paper. I am impressed with the time and effort put into producing detailed posts such as yours. However, my overall assessment is that these methodological concerns are not persuasive in the grand scheme. But other scholars may disagree.

So that’s my take on the methods. I welcome your thoughts in response. I doubt this current study will end debate about sex bias in science. Nor should it. We still have a lot to learn about what contexts might undermine women.

But the current study’s diverse methods and robust results indicate that hiring STEM faculty is likely not one of those contexts.

Disclaimer: Ceci was the editor of a study I recently published in Frontiers in Psychology. I have been in email conversation with Williams and Ceci, but did not send them a draft of this comment before posting. I was not asked by them to write this comment.

dx.doi.org/10.3389/fpsyg.2015.00037

I wouldn’t normally publish a comment that is so clearly aimed elsewhere and doesn’t seem to actually address the content of my post. But I figure I might as well, as it appears to be trying to be constructive, and because it gives me the opportunity to reiterate: I personally wouldn’t go as far as suggesting that this study should not have been published due to methodological flaws. I do think it is important to acknowledge issues, and I think that could have been done much better in the paper itself. Even more significantly, I think that the reporting of the paper (and the authors’ claims, especially given their stated motivations) was poor. But then, that’s not new or surprising, just a reason to celebrate public post-publication discussion and critique.